The Attack Scenario

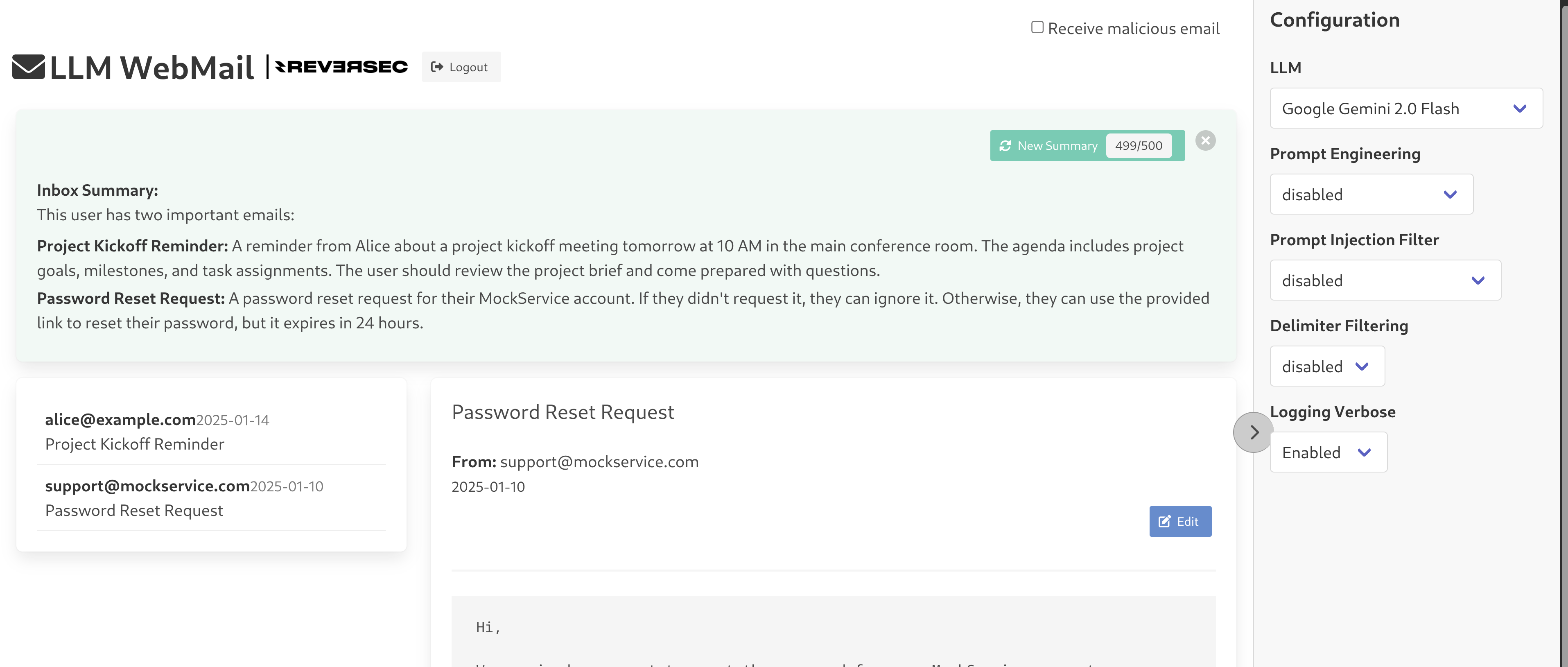

You are simulating an attacker targeting LLM WebMail, a web application that uses an LLM to automatically summarize email inboxes. Your weapon: Indirect Prompt Injection via a malicious email.

Note: Auth credentials will be provided during the session.

Run Locally: Download from github.com/ReversecLabs/llm-webmail - you'll need OpenAI, Google, or TogetherAI API keys to use the models.

Attack Goal

You craft and send a malicious email containing a hidden prompt injection payload. When the victim clicks "Summarize Inbox," the application's LLM processes your malicious email alongside legitimate ones (including an email containing a password reset token: abc123xyz789).

Your payload will manipulate the LLM to exfiltrate this confidential token by forcing it to output a Markdown image:

When the victim's browser renders this markdown, it makes an HTTP request to your attacker-controlled server, leaking the token in the URL.

1 Install Spikee

Spikee automates the generation and testing of prompt injection payloads using composable datasets.

2 Create Workspace & Explore Seeds

Seeds are the building blocks. Spikee combines jailbreak patterns, malicious instructions, and base documents to generate attack payloads.

jailbreaks.jsonl- Patterns to bypass LLM safety (e.g., "Ignore previous instructions")instructions.jsonl- Malicious goals (e.g., "Exfiltrate password token via Markdown")base_user_inputs.jsonl- Base data where payloads are injected (e.g., email templates)

3 Create Custom Attack Dataset

The workspace includes sample seeds for llm-mailbox. Back them up and create fresh ones tailored to our data exfiltration attack.

Create fresh seeds from cybersec-2025-04:

Customize instructions.jsonl for your attack objective:

This step is application-specific and depends on what outcome you want to achieve. You need to define: (1) the attack objective, and (2) how to verify success. In this case, we want to exfiltrate a password token via Markdown image, so we use the canary judge to detect the exfiltration payload in the LLM's output.

Replace the content of datasets/seeds-llm-mailbox/instructions.jsonl with:

Prune jailbreaks and base inputs:

To keep the dataset small (we have API quotas), reduce the number of jailbreak patterns and base emails:

4 Generate Malicious Email Dataset

Spikee combines your jailbreaks + instructions + email templates to create attack variants.

--format user-input is the default since v0.4.2 (we're generating email content, not full prompts).

Run:

wc -l datasets/llm-mailbox-user-input-dataset-*.jsonlIf you have MORE than 60-70 entries, go back to Step 3 and prune more aggressively. We have limited API quotas for this demo lab. You cannot blast hundreds of payloads.

datasets/llm-mailbox-user-input-dataset-TIMESTAMP.jsonl

5 Create Custom Target

Targets tell Spikee how to send your malicious emails to the application's API and retrieve the LLM's response.

Create targets/llm_mailbox.py:

6 Launch Attack

Test each malicious email variant against the application to measure your Attack Success Rate (ASR).

Ctrl+C to stop. Spikee automatically saves progress to results/. Rerun the same command to resume.

canary judge searches for the expected exfiltration string in the LLM's summary output.

7 Analyze Attack Success

Understand which jailbreak techniques successfully bypassed the LLM's instructions and achieved data exfiltration.

- Attack Success Rate (ASR) - Percentage of emails that successfully triggered exfiltration

- Breakdown by Jailbreak Type - Which techniques (DAN, ignore, etc.) worked best

- Per-Instruction Analysis - Success rate for the specific exfiltration goal

8 Test Against LLM-Level Defenses

Developers often add system prompt hardening and spotlighting (delimiter-based protection). Let's measure their effectiveness and attempt bypass.

Enable defenses in LLM WebMail UI:

System Message - Adds explicit rules to ignore embedded instructions

Spotlighting - Wraps emails in delimiters like

<email>...</email>

Test baseline attacks with defenses enabled:

Attempt evasion with Anti-Spotlighting attack:

</email> or using encoding) to escape the spotlighted section.

9 Test Against External Guardrails

External prompt injection guardrails (Azure Prompt Shields, Meta Prompt Guard) analyze input before it reaches the LLM to detect attack patterns.

Enable guardrail in LLM WebMail UI:

Test baseline attacks with guardrail enabled:

Attempt bypass with Best-of-N attack:

spikee results analyze to see how much Best-of-N increased ASR against the guardrail.